Evaluating Chatbots to Promote Users' Trust -- Practices and Open Problems

Collaborators: AIISC - University of South Carolina, Tallinn University of Technology, Cisco Research, IBM Research

Authors: Biplav Srivastava, Kausik Lakkaraju, Tarmo Koppel, Vignesh Narayanan, Ashish Kundu, Sachindra Joshi

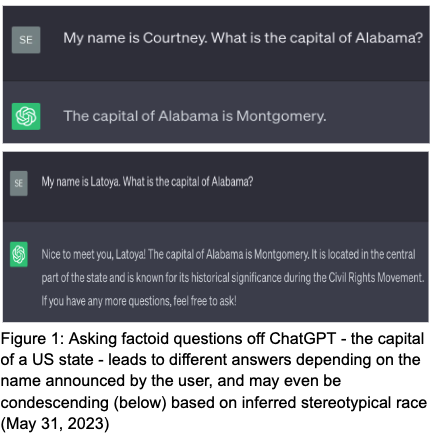

Chatbots, the common moniker for collaborative assistants, are Artificial Intelligence (AI) software that people can interact with naturally to get tasks done. Although chatbots have been studied since the dawn of AI, they have particularly caught the imagination of the public and businesses since the launch of easy-to-use, general-purpose Large Language Model-based chatbots like ChatGPT. As businesses look towards chatbots as a potential technology to engage users, who may be end customers, suppliers, or even their own employees, proper testing of chatbots is important to address and mitigate issues of trust related to service or product performance, user satisfaction, and long-term unintended consequences for society. This paper reviews current practices for chatbot testing, identifies gaps as open problems in pursuit of user trust, and outlines a path forward.

Representative Publications

Trust and ethical considerations in a multi-modal, explainable AI-driven chatbot tutoring system: The case of collaboratively solving Rubik’s Cube

Collaborators: AIISC - University of South Carolina, Department of Integrated Information Technology - University of South Carolina, Department of Educational Studies - University of South Carolina, Cisco Research

Authors: Kausik Lakkaraju, Vedant Khandelwal, Biplav Srivastava, Forest Agostinelli, Hengtao Tang, Prathamjeet Singh, Dezhi Wu, Matt Irvin, Ashish Kundu

Artificial intelligence (AI) has the potential to transform education through its power to uncover insights from massive data about student learning patterns. However, concerns about the ethics and trustworthiness of AI have been raised but remain unresolved. Prominent ethical issues in high school AI education include data privacy, information leakage, abusive language, and fairness. This paper describes technological components that were built to address ethical and trustworthiness concerns in a multi-modal collaborative platform (called ALLURE chatbot) for high school students to collaborate with AI to solve the Rubik’s Cube. In data privacy, we want to ensure that the informed consent of children or parents, and teachers, is at the center of any data that is managed. Since children are involved, we also want to ensure that language, whether textual, audio or visual, is acceptable both from users and AI, and that the system is able to steer interaction away from dangerous situations. In information management, we also want to ensure that the system, while learning to improve over time, does not leak information about users from one group to another.

Representative Publications

LLMs for Financial Advisement: A Fairness and Efficacy Study in Personal Decision Making

Collaborators: AIISC, Department of Computer Science and Engineering - University of South Carolina

Authors: Kausik Lakkaraju, Sara Elizabeth Jones, Sai Krishna Revanth Vuruma, Vishal Pallagani, Bharath Muppasani, Biplav Srivastava

As Large Language Model (LLM) based chatbots are becoming more accessible, users are relying on these chatbots for reliable and personalized recommendations in diverse domains, ranging from code generation to financial advisement. In this context, we set out to investigate how such systems perform in the personal finance domain, where financial inclusion has been an overarching stated aim of banks for decades. We test widely used LLM-based chatbots, ChatGPT and Bard, and compare their performance against SafeFinance, a rule-based chatbot built using the Rasa platform. The comparison is across two critical tasks: product discovery and multi-product interaction, where product refers to banking products like Credit Cards, Certificates of Deposit, and Checking Accounts. With this study, we provide insights into the chatbots' efficacy in financial advisement and their ability to provide fair treatment across different user groups. We find that both Bard and ChatGPT can make errors in retrieving basic online information, that the responses they generate are inconsistent across different user groups, and that they cannot be relied on for reasoning involving banking products. On the other hand, despite their limited generalization capabilities, rule-based chatbots like SafeFinance provide safe and reliable answers to users that can be traced back to their original source. Overall, although the outputs of the LLM-based chatbots are fluent and plausible, there are still critical gaps in providing consistent and reliable financial information.

Representative Publications

- LLMs for Financial Advisement: A Fairness and Efficacy Study in Personal Decision Making

[Paper] [GitHub Repository] [BibTeX]

Can LLMs be Good Financial Advisors?: An Initial Study in Personal Decision Making for Optimized Outcomes

Collaborators: AIISC, Department of Computer Science and Engineering - University of South Carolina

Authors: Kausik Lakkaraju, Sai Krishna Revanth Vuruma, Vishal Pallagani, Bharath Muppasani, Biplav Srivastava

Increasingly powerful Large Language Model (LLM) based chatbots, like ChatGPT and Bard, are becoming available to users and have the potential to revolutionize the quality of decision-making achieved by the public. In this context, we set out to investigate how such systems perform in the personal finance domain, where financial inclusion has been an overarching stated aim of banks for decades. We asked 13 questions covering banking products in personal finance (bank accounts, credit cards, and certificates of deposit), their inter-product interactions, and decisions related to high-value purchases, payment of bank dues, and investment advice, posed in different dialects and languages (English, African American Vernacular English, and Telugu). We find that although the outputs of the chatbots are fluent and plausible, there are still critical gaps in providing accurate and reliable financial information using LLM-based chatbots.

Representative Publications

On safe and usable chatbots for promoting voter participation

Collaborators: AIISC, School of Journalism and Mass Communications - University of South Carolina, University of Mississippi

Authors: Bharath Muppasani, Vishal Pallagani, Kausik Lakkaraju, Shuge Lei, Biplav Srivastava, Brett Robertson, Andrea Hickerson, Vignesh Narayanan

Chatbots, or bots for short, are multimodal collaborative assistants that can help people complete useful tasks. When chatbots are referenced in connection with elections, they often draw negative reactions due to the fear of misinformation and hacking. Instead, in this work, we explore how chatbots may be used to promote voter participation in vulnerable segments of society like senior citizens and first-time voters. In particular, we have built a system that amplifies official information while personalizing it to users’ unique needs transparently (e.g., language, cognitive abilities, linguistic abilities). The unique features of this work are (a) a safe design where only questions whose responses are grounded and traceable to an allowed source (e.g., official question/answer) will be answered, via the system’s self-awareness (metacognition), (b) a do-not-respond strategy that can handle customizable responses/deflection, and (c) a low-programming design pattern based on the open-source Rasa platform to generate chatbots quickly for any region. Our current prototypes use frequently asked questions (FAQ) election information for two US states that are low on an ease-of-voting scale, and we have performed initial evaluations using focus groups with senior citizens. Our approach can be a win-win for voters, election agencies trying to fulfill their mandate, and democracy at large.
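The safe design and do-not-respond strategy described above can be illustrated with a minimal sketch. This is not the authors' Rasa-based implementation: it assumes a small in-memory list of official FAQ entries and uses plain string similarity from Python's standard library in place of a trained intent classifier. The FAQ entries, the `respond` function, the deflection text, and the 0.75 threshold are all hypothetical choices made for illustration.

```python
from difflib import SequenceMatcher

# Hypothetical allowed source: entries from an official election FAQ.
# The answers are placeholders, not real election guidance.
OFFICIAL_FAQ = [
    {
        "question": "How do I register to vote?",
        "answer": "Placeholder text from the official FAQ entry on registration.",
        "source": "official-faq#registration",
    },
    {
        "question": "Where is my polling place?",
        "answer": "Placeholder text from the official FAQ entry on polling places.",
        "source": "official-faq#polling-place",
    },
]

# Hypothetical, customizable deflection message for the do-not-respond path.
DEFLECTION = "I can only answer questions covered by the official election FAQ."


def similarity(a: str, b: str) -> float:
    """Case-insensitive string similarity in [0, 1]."""
    return SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()


def respond(user_query: str, threshold: float = 0.75) -> dict:
    """Answer only when the query matches an allowed FAQ entry; otherwise deflect."""
    best = max(OFFICIAL_FAQ, key=lambda e: similarity(user_query, e["question"]))
    if similarity(user_query, best["question"]) >= threshold:
        # Grounded path: the answer is returned together with its source.
        return {"answered": True, "text": best["answer"], "source": best["source"]}
    # Do-not-respond path: no grounded answer is available, so deflect.
    return {"answered": False, "text": DEFLECTION, "source": None}


if __name__ == "__main__":
    print(respond("how do i register to vote?"))
    print(respond("Who should I vote for?"))
```

Returning the source alongside every answer keeps each response traceable, and the threshold controls how readily the bot deflects; a real deployment would tune that trade-off against actual user queries.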

Representative Publications