Generalized Planning

Key Idea

Our research in Generalized Planning focuses on building models and frameworks that reason, plan, and adapt across diverse tasks. We develop transformer-based architectures for sequential decision-making, design agentic systems inspired by Thinking, Fast and Slow, and create knowledge engineering ontologies that support structured reasoning.

Quick Start

- To get started with how we fine-tune LLMs for plan generation, explore our lab materials on GitHub (2024). For a detailed comparison of different language model architectures and their effectiveness in generating plans, read our benchmarking paper (2023).

- Explore our SOFAI Lab library (2025) to understand our architecture inspired by the principles of Thinking, Fast and Slow, and see how it improves planning performance in this paper (2024).

- Learn how we are building a comprehensive Plan Ontology to represent and leverage planning knowledge for downstream applications. Explore the project details and resources on our website.

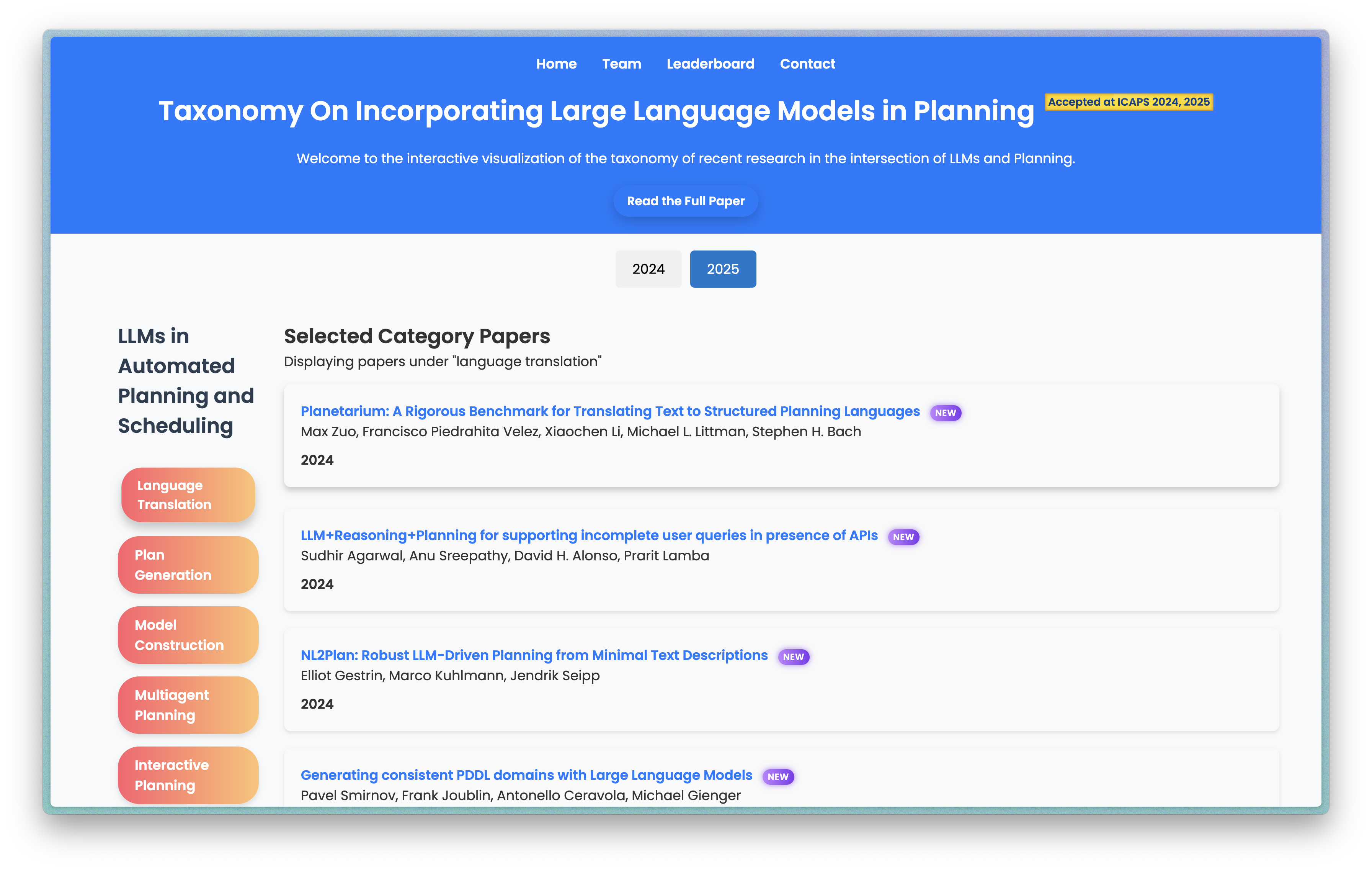

The LLM Planning Visualization Tool offers an interactive way to explore the evolution and landscape of Large Language Models in planning research. This tool, part of the NAIRR PlanFM project, provides dynamic insights into research trends.

Foundation Models for Planning

Collaborators: Vishal Pallagani, Bharath Muppasani, Biplav Srivastava, Francesca Rossi, Lior Horesh, Keerthiram Murugesan, Kaushik Roy, Amit Sheth

Large Language Models (LLMs) have been the subject of active research, significantly advancing the field of Natural Language Processing (NLP). From BERT to BLOOM, LLMs have surpassed previous state-of-the-art results in various natural language tasks such as question answering, summarization, and text generation. Many ongoing efforts focus on understanding LLMs' capabilities, including their knowledge of the world, syntax, and semantics. However, extending the textual prowess of LLMs to symbolic reasoning has been slow and has focused predominantly on mathematical problems. In this work, we explore the use of LLMs for automated planning, a branch of AI concerned with the realization of action sequences (plans) to achieve a goal, typically executed by intelligent agents, autonomous robots, and unmanned vehicles.
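To make the notion of a plan as an action sequence concrete, here is a minimal sketch of a classical planning problem solved by breadth-first search over states. The toy delivery domain, the `Action` type, and the `bfs_plan` function are hypothetical names chosen for illustration; they are not part of our tools, and real planners use far more sophisticated search.

```python
from collections import deque
from typing import FrozenSet, List, NamedTuple, Optional


class Action(NamedTuple):
    """A STRIPS-style action (illustrative, not from our codebase)."""
    name: str
    preconditions: FrozenSet[str]
    add_effects: FrozenSet[str]
    del_effects: FrozenSet[str]


def bfs_plan(init: FrozenSet[str], goal: FrozenSet[str],
             actions: List[Action]) -> Optional[List[str]]:
    """Return a shortest sequence of action names reaching the goal, or None."""
    start = frozenset(init)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, plan = frontier.popleft()
        if goal <= state:  # every goal fact holds in this state
            return plan
        for action in actions:
            if action.preconditions <= state:  # action is applicable
                nxt = (state - action.del_effects) | action.add_effects
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, plan + [action.name]))
    return None  # goal unreachable


# Hypothetical toy domain: a robot fetches a package from room B.
ACTIONS = [
    Action("move-a-b", frozenset({"robot-at-a"}),
           frozenset({"robot-at-b"}), frozenset({"robot-at-a"})),
    Action("move-b-a", frozenset({"robot-at-b"}),
           frozenset({"robot-at-a"}), frozenset({"robot-at-b"})),
    Action("pick-up", frozenset({"robot-at-b", "package-at-b"}),
           frozenset({"holding-package"}), frozenset({"package-at-b"})),
]
INIT = frozenset({"robot-at-a", "package-at-b"})
GOAL = frozenset({"robot-at-a", "holding-package"})

print(bfs_plan(INIT, GOAL, ACTIONS))
# prints ['move-a-b', 'pick-up', 'move-b-a']
```

An LLM-based planner would aim to produce the same kind of action sequence directly from a textual description of the initial state, goal, and actions, rather than by explicit search.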

SubProjects

PlanFM – Our NSF EAGER–funded effort demonstrates a compact, from-scratch foundation model tailored for planning-like tasks. It tackles resource barriers and drives a transparent, bottom-up development methodology. Learn more about PlanFM →

Representative Publications

-

Revisiting LLMs in Planning from Literature Review: a Semi-Automated Analysis

Approach and Evolving Categories Representing Shifting Perspectives

The 35th International Conference on Automated Planning and Scheduling (ICAPS), 2025

[Paper] [BibTex] [Visualization Tool] [Github] -

On the Prospects of Incorporating Large Language Models (LLMs) in Automated Planning

and Scheduling (APS)

The 34th International Conference on Automated Planning and Scheduling (ICAPS), 2024

[Paper] [BibTex] [Visualization Tool] [Github] -

The Case for Developing a Foundation Model for Planning-like Tasks from Scratch

Planning and Reinforcement Learning (PRL) Workshop at ICAPS, 2024

[Paper]

-

Understanding the Capabilities of Large Language Models for Automated Planning

Preprint, 2023

[Paper] [BibTex] -

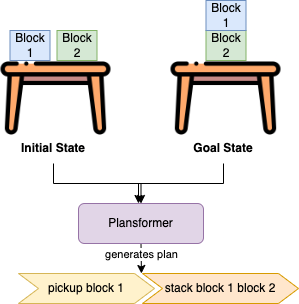

Plansformer Tool: Demonstrating Generation of Symbolic Plans Using Transformers

Proc. of the Thirty-Second International Joint Conference on Artificial Intelligence (IJCAI) Demonstrations Track, 2023

[Paper][Tool Website] [Demo][BibTex] -

Plansformer: Generating Symbolic Plans using Transformers

Workshop on Generalization in Planning (GenPlan) at NeurIPS, 2023

[Paper] [BibTex]

Representative Activities

- Harnessing Large Language Models for Planning: A Lab on Strategies for Success and Mitigation of Pitfalls
  AAAI Conference on Artificial Intelligence, 2024
  [Github] [Website] [BibTex]

Representative Patents

- P202203822US01 Plansformer - a transformer planner
  Francesca Rossi, Lior Horesh, Vishal Pallagani, Biplav Srivastava, Andrea Loreggia

- USC 1702 Unsupervised Plan Summarization to Improve Planner Performance and Human Interpretability
  Biplav Srivastava, Vishal Pallagani

Planning using Fast and Slow AI Architecture

Collaborators: Vishal Pallagani, Biplav Srivastava, Francesca Rossi, Lior Horesh, Keerthiram Murugesan

The recently introduced Fast and Slow AI (SOFAI) architecture is inspired by the cognitive theories described by Daniel Kahneman in Thinking, Fast and Slow. This research project aims to build AI-supported machines that can

- make decisions with emergent behaviors similar to human ones, and

- support human decision making through nudging and explanations.

To achieve these goals, the team is designing and building a cognitive architecture that mimics the two broad modalities of fast and slow thinking in a machine. We adapt the SOFAI architecture to planning domains, where each incoming problem is solved by one of two kinds of agents, also called solvers. System 1 ("fast", S1) agents react by exploiting either past experience (case-based reasoning) or a learned model called Plansformer. System 2 ("slow", S2) agents are deliberately activated when there is a need to reason and search for optimal solutions beyond what is expected from the System 1 agent. The SOFAI architecture with Plansformer as S1 solves more problems than the symbolic planner (FastDownward, which is also used as S2). Visit the dedicated website for more details on SOFAI.
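The fast-then-slow dispatch described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the SOFAI implementation: the function `solve_fast_then_slow`, the confidence score returned by S1, the `threshold` parameter, the `is_valid` check, and the stub solvers are all hypothetical stand-ins for the actual components.

```python
from typing import Callable, Dict, List, Optional, Tuple

Plan = List[str]
# Assumed interfaces (hypothetical): S1 returns a candidate plan with a
# confidence in [0, 1]; S2 returns a plan found by deliberate search.
S1Solver = Callable[[str], Tuple[Optional[Plan], float]]
S2Solver = Callable[[str], Optional[Plan]]
Validator = Callable[[str, Plan], bool]


def solve_fast_then_slow(problem: str, s1: S1Solver, s2: S2Solver,
                         is_valid: Validator,
                         threshold: float = 0.8) -> Tuple[Optional[Plan], str]:
    """Try the fast solver first; fall back to the slow solver if needed."""
    plan, confidence = s1(problem)
    if plan is not None and confidence >= threshold and is_valid(problem, plan):
        return plan, "S1"
    # S1 was unavailable, unsure, or wrong: deliberately activate S2.
    return s2(problem), "S2"


# Stub S1: case-based reasoning as a lookup over previously solved problems.
CASE_BASE: Dict[str, Plan] = {
    "deliver-package": ["move-a-b", "pick-up", "move-b-a"],
}


def case_based_s1(problem: str) -> Tuple[Optional[Plan], float]:
    plan = CASE_BASE.get(problem)
    return (plan, 1.0) if plan is not None else (None, 0.0)


# Stub S2: stands in for a symbolic planner's search.
def stub_s2(problem: str) -> Optional[Plan]:
    return ["search-step-1", "search-step-2"]


# Stub validator: a real one would simulate the plan against the domain.
def non_empty(problem: str, plan: Plan) -> bool:
    return len(plan) > 0


print(solve_fast_then_slow("deliver-package", case_based_s1, stub_s2, non_empty))
print(solve_fast_then_slow("unseen-problem", case_based_s1, stub_s2, non_empty))
```

In this sketch the first call is answered by the S1 stub from its case base, while the second falls through to the S2 stub because S1 has no matching experience. Checking the S1 plan before accepting it is one plausible design choice, since a learned model may produce invalid plans.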

Representative Publications

- Plan-SOFAI: A Neuro-Symbolic Planning Architecture
  Neuro-Symbolic Learning and Reasoning in the era of Large Language Models (NuCLeaR) Workshop at AAAI 2024
  [Paper] [BibTex]

- Fast and Slow Planning
  Preprint
  [Paper] [BibTex]

- Epistemic Planning in a Fast and Slow Setting
  AAAI 2022 Fall Symposium Series, Thinking Fast and Slow and Other Cognitive Theories in AI track
  [Paper] [BibTex]

Representative Activities