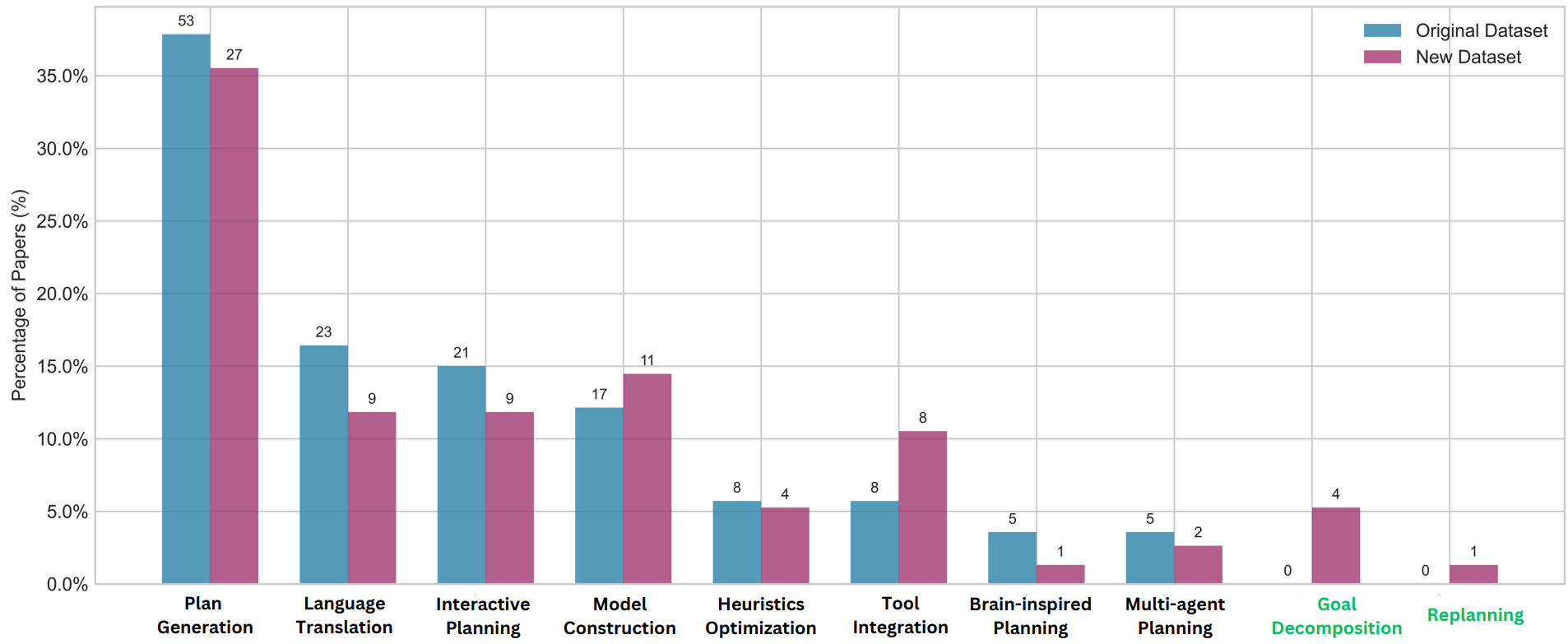

The histogram compares how research focus has shifted between our two datasets. Three trends stand out: Plan Generation remains the dominant category, although its share of the total has decreased; Model Construction and Tool Integration have grown significantly; and new categories addressing task structuring and adaptability have emerged. These shifts reflect a broader recognition that LLMs face fundamental challenges in autonomous planning but excel when integrated into frameworks with external verifiers and specialized tools.