Collaborative Assistants

Key Idea

Our work in Collaborative Assistants focuses on creating trustworthy AI systems that work synergistically with humans in sensitive domains. We develop neuro-symbolic architectures combining neural networks with symbolic reasoning, build safety-focused chatbot frameworks, and create adaptive dialog systems using planning and reinforcement learning techniques.

Quick Start

- For medical students practicing patient interviews with a chatbot: try our SafeChat HIV/AIDS Information Tool yourself and get reliable information on HIV/AIDS (prevention, treatment, and living with it) from trusted sources such as UNAIDS. [Try it now]

- For chatbot developers: explore the SafeChat framework for building trustworthy, domain-specific chatbots. [Paper] [GitHub]

News

SafeChat

Collaborators: Vishal Pallagani, Kausik Lakkaraju, Bharath Muppasani, Biplav Srivastava

SafeChat is an architecture that aims to provide a safe and secure environment for users to interact with AI chatbots in trust-sensitive domains. It combines neural (learning-based, including generative AI) and symbolic (rule-based) methods, together called a neuro-symbolic approach, to provide known information in easy-to-consume forms that are adapted from user interactions (provenance). The resulting chatbots are scalable and quick to build, and they are evaluated for trust issues such as fairness, robustness, and appropriateness of responses.
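SafeChat's actual components are described in its paper and repository; the sketch below does not reproduce them. It only illustrates the general neuro-symbolic pattern described above, under stated assumptions: a hypothetical keyword block list plays the symbolic "rule" layer, a Jaccard word-overlap score stands in for the neural matcher, and every answer comes from a hypothetical approved FAQ list that records its source. None of these names (`FAQ`, `BLOCKED_TERMS`, `respond`) come from the SafeChat codebase.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class FAQ:
    """One approved question-answer pair plus the source it came from (provenance)."""
    question: str
    answer: str
    source: str


# Hypothetical rule: requests containing these words are declined outright.
BLOCKED_TERMS = {"diagnose", "prescribe"}


def tokens(text: str) -> set[str]:
    """Lowercased words with surrounding punctuation removed."""
    words = (w.strip("?.,!").lower() for w in text.split())
    return {w for w in words if w}


def similarity(a: str, b: str) -> float:
    """Stand-in for a learned (neural) matcher: Jaccard overlap of word sets."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def respond(query: str, faqs: list[FAQ], threshold: float = 0.3) -> str:
    # Symbolic guard: decline blocked requests before any matching happens.
    if tokens(query) & BLOCKED_TERMS:
        return "I can't help with that. Please consult a health professional."
    # Matching step: find the closest approved question.
    best = max(faqs, key=lambda f: similarity(query, f.question), default=None)
    # Symbolic rule: answer only on a confident match, and always cite the source.
    if best is None or similarity(query, best.question) < threshold:
        return "I don't have a verified answer to that."
    return f"{best.answer} (Source: {best.source})"
```

For example, with a hypothetical entry `FAQ("How is HIV transmitted?", "<approved answer>", "UNAIDS")`, the query "how is HIV transmitted" returns the stored answer followed by "(Source: UNAIDS)", while "Can you diagnose me?" is declined by the rule before any matching. Keeping the rules outside the matcher means they can be audited and changed without retraining anything.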

Representative Publications

- A Vision for Reinventing Credible Elections with Artificial Intelligence

Thirty-Ninth AAAI Conference on Artificial Intelligence (AAAI-25), Philadelphia, USA, Feb 2025

Biplav Srivastava

[Paper] [Slides]

- Do Voters Get the Information They Want? Understanding Authentic Voter FAQs in the US and How to Improve for Informed Electoral Participation

TrustNLP workshop at Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL 2025), Albuquerque, USA, May 2025

Vipula Rawte, Deja N Scott, Gaurav Kumar, Aishneet Juneja, Bharat Sowrya Yaddanapalli, Biplav Srivastava

[Paper]

- Disseminating Authentic Public Messages using Chatbots - A Case Study with ElectionBot-SC to Understand and Compare Chatbot Behavior for Safe Election Information in South Carolina

AI for Public Mission Workshop at Thirty-Ninth AAAI Conference on Artificial Intelligence (AAAI-25), Philadelphia, USA, Feb 2025

Nitin Gupta, Vansh Nagpal, Bharath Muppasani, Kausik Lakkaraju, Sara Jones, Biplav Srivastava

[Paper]

- On Safe and Usable Chatbots for Promoting Voter Participation

AAAI AI Magazine, 2023

Bharath Muppasani, Vishal Pallagani, Kausik Lakkaraju, Shuge Lei, Biplav Srivastava, Brett Robertson, Andrea Hickerson, Vignesh Narayanan

[Paper]

- LLMs for Financial Advisement: A Fairness and Efficacy Study in Personal Decision Making

4th ACM International Conference on AI in Finance: ICAIF'23, New York, 2023

Kausik Lakkaraju, Sara Rae Jones, Sai Krishna Revanth Vuruma, Vishal Pallagani, Bharath C Muppasani and Biplav Srivastava

[Paper] [Slides]

- Trust and ethical considerations in a multi-modal, explainable AI-driven chatbot tutoring system: The case of collaboratively solving Rubik’s Cube

ICML 2023 TEACH Conversational AI Workshop, Hawaii, 2023

Kausik Lakkaraju, Vedant Khandelwal, Biplav Srivastava, Forest Agostinelli, Hengtao Tang, Prathamjeet Singh, Dezhi Wu, Matt Irvin, Ashish Kundu

[Paper]

Representative SafeChat Framework Usage and Instances

- Use of SafeChat in teaching how to build chatbots in CSCE 580 (Fall 2023; 25 students).

- Use of SafeChat by a student in CSCE 580 (Fall 2023) to create a chatbot interface for the Water Quality Decider System.

- Use of SafeChat in creating Garnet n Talk, a chatbot designed to answer questions about the University of South Carolina. This project was awarded Best Idea in the USC Spring 2024 Hackathon.

- Use of SafeChat for creating an election chatbot for Mississippi.

- Use of SafeChat for creating the ALLURE chatbot in education.

Generic Information Retrieval Chatbot using Planning & RL

Collaborators: Vishal Pallagani, Biplav Srivastava

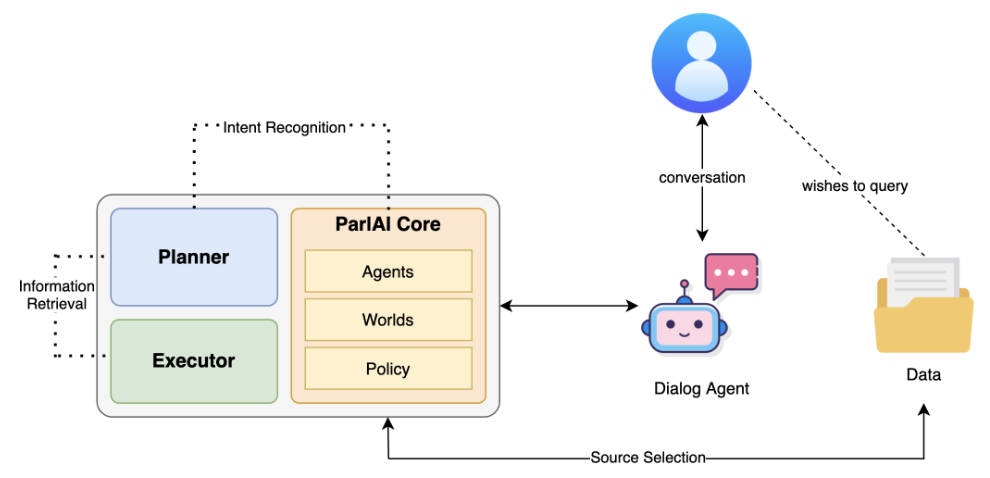

In this body of work, we address the challenge of enabling users to effectively search large datasets, such as product catalogs and open data, using natural language. Many users find it difficult to interact with these datasets due to unfamiliarity with query languages. Our system allows users to retrieve information through a dialog-based interface that adapts to the underlying structure of the data, making it versatile across various domains. By incorporating planning techniques and reinforcement learning (RL), the system can learn and optimize its search strategies over time. We demonstrate the system’s effectiveness using datasets such as UNSPSC and ICD-10, highlighting its capability to enhance information retrieval through the combined use of planning and RL.
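This sketch does not reproduce the authors' system. It only illustrates the RL half of the idea, learning which question to ask next, under stated assumptions: tabular Q-learning (our choice of algorithm), a simulated user who answers truthfully about a hidden target item, a reward of -1 per question, and a hypothetical four-item catalog whose attribute names loosely echo UNSPSC's segment/family/commodity levels. The planning component is not modeled, and all names (`CATALOG`, `train`, `run_dialog`) are hypothetical.

```python
import random
from collections import defaultdict

# Hypothetical toy catalog; attribute names loosely mirror UNSPSC levels.
CATALOG = [
    {"segment": "IT", "family": "computers", "commodity": "notebook"},
    {"segment": "IT", "family": "computers", "commodity": "desktop"},
    {"segment": "IT", "family": "phones", "commodity": "smartphone"},
    {"segment": "Office", "family": "paper", "commodity": "copy paper"},
]
ATTRS = ["segment", "family", "commodity"]


def narrow(items, cands, attr, value):
    """Keep only candidate indices whose attribute matches the user's answer."""
    return tuple(i for i in cands if items[i][attr] == value)


def train(items, attrs, episodes=2000, alpha=0.5, gamma=1.0, epsilon=0.2, seed=0):
    """Tabular Q-learning: state = (remaining candidates, attributes already asked)."""
    rng = random.Random(seed)
    q = defaultdict(float)  # (state, attribute) -> estimated return
    for _ in range(episodes):
        target = rng.randrange(len(items))  # simulated user's hidden goal
        cands, asked = tuple(range(len(items))), frozenset()
        while len(cands) > 1 and len(asked) < len(attrs):
            state = (cands, asked)
            actions = [a for a in attrs if a not in asked]
            if rng.random() < epsilon:  # explore
                act = rng.choice(actions)
            else:  # exploit current estimates
                act = max(actions, key=lambda a: q[(state, a)])
            nxt_cands = narrow(items, cands, act, items[target][act])
            nxt_asked = asked | {act}
            done = len(nxt_cands) == 1 or len(nxt_asked) == len(attrs)
            future = 0.0 if done else max(
                q[((nxt_cands, nxt_asked), a)] for a in attrs if a not in nxt_asked
            )
            # Reward is -1 per question, so shorter dialogs score higher.
            q[(state, act)] += alpha * (-1.0 + gamma * future - q[(state, act)])
            cands, asked = nxt_cands, nxt_asked
    return q


def run_dialog(items, attrs, q, target):
    """Greedy dialog with the learned Q-table; returns final candidates and questions asked."""
    cands, asked, questions = tuple(range(len(items))), frozenset(), []
    while len(cands) > 1 and len(asked) < len(attrs):
        state = (cands, asked)
        act = max((a for a in attrs if a not in asked), key=lambda a: q[(state, a)])
        questions.append(act)
        cands = narrow(items, cands, act, items[target][act])
        asked = asked | {act}
    return cands, questions


if __name__ == "__main__":
    q_table = train(CATALOG, ATTRS)
    for t in range(len(CATALOG)):
        print(CATALOG[t]["commodity"], run_dialog(CATALOG, ATTRS, q_table, t))
```

Because every question costs -1, the learned policy should favor the most discriminating attribute; on this toy catalog it should learn to ask for `commodity` first. A real system would also need to handle users who cannot answer a question, which this sketch ignores.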

Representative Publications

- A generic dialog agent for information retrieval based on automated planning within a reinforcement learning platform

Bridging the Gap Between AI Planning and Reinforcement Learning (PRL) Workshop at ICAPS, 2021

[Paper] [BibTeX] [Poster] [GitHub]

- PRUDENT: A Generic Dialog Agent for Information Retrieval That Can Flexibly Mix Automated Planning and Reinforcement Learning

ICAPS Demonstration Track, 2021

[Paper] [BibTeX] [Demo] [GitHub]

For more details, visit: https://sites.google.com/site/biplavsrivastava/research-1/dialog